Monitor and trace your Haystack pipelines with Langfuse

With the new Langfuse integration, it's easier than ever to understand how your pipelines perform.

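May 17, 2024

Getting your LLM application into production is a major milestone, but it is only the beginning. It is critical to monitor how your pipeline performs in the real world so that you can keep improving performance and cost, and proactively address any issues that arise.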

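With the new Haystack Langfuse integration, it's now easier than ever to gain visibility into your pipelines. In this post, we'll take a closer look at Langfuse and demonstrate how to trace an end-to-end request to a Haystack pipeline.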

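What is Langfuse?

Langfuse is an open source LLM engineering platform. It offers a wide range of features that help you understand what your LLM application is doing under the hood.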

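Langfuse features and benefits

- Track model usage and cost

- Collect user feedback

- Identify low-quality outputs

- Build fine-tuning and testing datasets

- Open source 💙

- Self-hosted version available

- Frequent releases of new features and improvements

- Free to try as of this writing 🤑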

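Getting started

To use this integration, you'll need to sign up for a Langfuse account. See the Langfuse docs for the latest information on features and pricing.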

Prerequisites

First, sign up for an account on the Langfuse website.

On the Langfuse Dashboard, note your LANGFUSE_SECRET_KEY and LANGFUSE_PUBLIC_KEY and set them as environment variables. Also set the HAYSTACK_CONTENT_TRACING_ENABLED environment variable to true to enable Haystack tracing in your pipeline.
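As a minimal sketch of this setup step, the snippet below enables content tracing from Python and reports which of the required variables are still unset. The helper name missing_env_vars and the REQUIRED_VARS tuple are hypothetical and exist only for illustration. The keys themselves are assumed to be exported in your shell rather than hard-coded, and it is safest to run a check like this before importing any Haystack components.

```python
import os

# Enable Haystack content tracing for this process.
os.environ["HAYSTACK_CONTENT_TRACING_ENABLED"] = "true"

# Variables named in this post; the tuple itself is an illustrative helper.
REQUIRED_VARS = (
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_PUBLIC_KEY",
    "HAYSTACK_CONTENT_TRACING_ENABLED",
    "OPENAI_API_KEY",
)


def missing_env_vars(names=REQUIRED_VARS, env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


missing = missing_env_vars()
if missing:
    print("Missing environment variables:", ", ".join(missing))
```

Failing early on a missing key is usually easier to debug than a pipeline run that silently produces no trace.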

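The code examples below also require the OPENAI_API_KEY environment variable to be set. Haystack is model-agnostic, so you can use any model provider we support by swapping the generator in the examples.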

Installation

To install the integration, run the following command in your terminal:

pip install langfuse-haystack

To run the RAG example below, you'll need a few additional dependencies:

pip install sentence-transformers datasets

Use Langfuse in a RAG pipeline

First, import all the modules you'll need.

from datasets import load_dataset
from haystack import Document, Pipeline
from haystack.components.builders import PromptBuilder
from haystack.components.embedders import SentenceTransformersDocumentEmbedder, SentenceTransformersTextEmbedder
from haystack.components.generators import OpenAIGenerator
from haystack.components.retrievers import InMemoryEmbeddingRetriever
from haystack.document_stores.in_memory import InMemoryDocumentStore
from haystack_integrations.components.connectors.langfuse import LangfuseConnector

Next, write a function that takes a DocumentStore and returns a Haystack RAG pipeline. Add the LangfuseConnector to your pipeline, but don't connect it to any other component in the pipeline.

def get_pipeline(document_store: InMemoryDocumentStore):
    retriever = InMemoryEmbeddingRetriever(document_store=document_store, top_k=2)

    template = """
    Given the following information, answer the question.

    Context:
    {% for document in documents %}
        {{ document.content }}
    {% endfor %}

    Question: {{question}}
    Answer:
    """

    prompt_builder = PromptBuilder(template=template)

    basic_rag_pipeline = Pipeline()
    # Add components to your pipeline
    basic_rag_pipeline.add_component("tracer", LangfuseConnector("Basic RAG Pipeline"))
    basic_rag_pipeline.add_component(
        "text_embedder", SentenceTransformersTextEmbedder(model="sentence-transformers/all-MiniLM-L6-v2")
    )
    basic_rag_pipeline.add_component("retriever", retriever)
    basic_rag_pipeline.add_component("prompt_builder", prompt_builder)
    basic_rag_pipeline.add_component("llm", OpenAIGenerator(model="gpt-3.5-turbo", generation_kwargs={"n": 2}))

    # Now, connect the components to each other
    # NOTE: the tracer component doesn't need to be connected to anything in order to work
    basic_rag_pipeline.connect("text_embedder.embedding", "retriever.query_embedding")
    basic_rag_pipeline.connect("retriever", "prompt_builder.documents")
    basic_rag_pipeline.connect("prompt_builder", "llm")

    return basic_rag_pipeline

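Now, instantiate the pipeline with an InMemoryDocumentStore to keep things simple. Generate some embeddings from the Seven Wonders dataset and write them to our document store. If you were running this code in production, you'd probably want to use an indexing pipeline to load the data into the store, but for demo purposes this approach reduces complexity.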

document_store = InMemoryDocumentStore()
dataset = load_dataset("bilgeyucel/seven-wonders", split="train")
embedder = SentenceTransformersDocumentEmbedder("sentence-transformers/all-MiniLM-L6-v2")
embedder.warm_up()
docs_with_embeddings = embedder.run([Document(**ds) for ds in dataset]).get("documents") or []  # type: ignore
document_store.write_documents(docs_with_embeddings)

Run the pipeline and ask it a question.

pipeline = get_pipeline(document_store)
question = "What does Rhodes Statue look like?"
response = pipeline.run({"text_embedder": {"text": question}, "prompt_builder": {"question": question}})

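With the HAYSTACK_CONTENT_TRACING_ENABLED environment variable set, every request the pipeline runs is traced automatically. If all goes well, you should receive output like this: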

# {'tracer': {'name': 'Basic RAG Pipeline', 'trace_url': 'https://cloud.langfuse.com/trace/3d52b8cc-87b6-4977-8927-5e9f3ff5b1cb'}, 'llm': {'replies': ['The Rhodes Statue was described as being about 105 feet tall, with iron tie bars and brass plates forming the skin. It was built on a white marble pedestal near the Rhodes harbour entrance. The statue was filled with stone blocks as construction progressed.', 'The Rhodes Statue was described as being about 32 meters (105 feet) tall, built with iron tie bars, brass plates for skin, and filled with stone blocks. It stood on a 15-meter-high white marble pedestal near the Rhodes harbor entrance.'], 'meta': [{'model': 'gpt-3.5-turbo-0125', 'index': 0, 'finish_reason': 'stop', 'usage': {'completion_tokens': 100, 'prompt_tokens': 453, 'total_tokens': 553}}, {'model': 'gpt-3.5-turbo-0125', 'index': 1, 'finish_reason': 'stop', 'usage': {'completion_tokens': 100, 'prompt_tokens': 453, 'total_tokens': 553}}]}}
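The output is a plain dictionary, so the fields of interest can be pulled out with ordinary Python. The sketch below works on a sample shaped like the output above, with the replies shortened. The helper summarize_response is hypothetical, and it makes two assumptions: that the trace ID is the last path segment of trace_url, and that the usage figures repeated in each meta entry describe the whole request, which is why only the first entry is read.

```python
from urllib.parse import urlparse

# Sample shaped like the pipeline output above; replies are shortened for illustration.
sample_response = {
    "tracer": {
        "name": "Basic RAG Pipeline",
        "trace_url": "https://cloud.langfuse.com/trace/3d52b8cc-87b6-4977-8927-5e9f3ff5b1cb",
    },
    "llm": {
        "replies": ["The Rhodes Statue was ...", "The Rhodes Statue was ..."],
        "meta": [
            {
                "model": "gpt-3.5-turbo-0125",
                "index": 0,
                "finish_reason": "stop",
                "usage": {"completion_tokens": 100, "prompt_tokens": 453, "total_tokens": 553},
            },
            {
                "model": "gpt-3.5-turbo-0125",
                "index": 1,
                "finish_reason": "stop",
                "usage": {"completion_tokens": 100, "prompt_tokens": 453, "total_tokens": 553},
            },
        ],
    },
}


def summarize_response(response: dict) -> dict:
    """Collect the trace link, reply count, and token usage from a pipeline response."""
    trace_url = response["tracer"]["trace_url"]
    # Assumption: the trace ID is the last path segment of the trace URL.
    trace_id = urlparse(trace_url).path.rstrip("/").rsplit("/", 1)[-1]
    meta = response["llm"]["meta"]
    return {
        "trace_url": trace_url,
        "trace_id": trace_id,
        "reply_count": len(response["llm"]["replies"]),
        "model": meta[0]["model"],
        # Assumption: both meta entries repeat the same request-level usage.
        "usage": meta[0]["usage"],
    }


summary = summarize_response(sample_response)
print(summary["trace_id"], summary["reply_count"], summary["usage"]["total_tokens"])
```

Logging a summary like this next to your application logs makes it easier to jump from a specific request to its trace.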

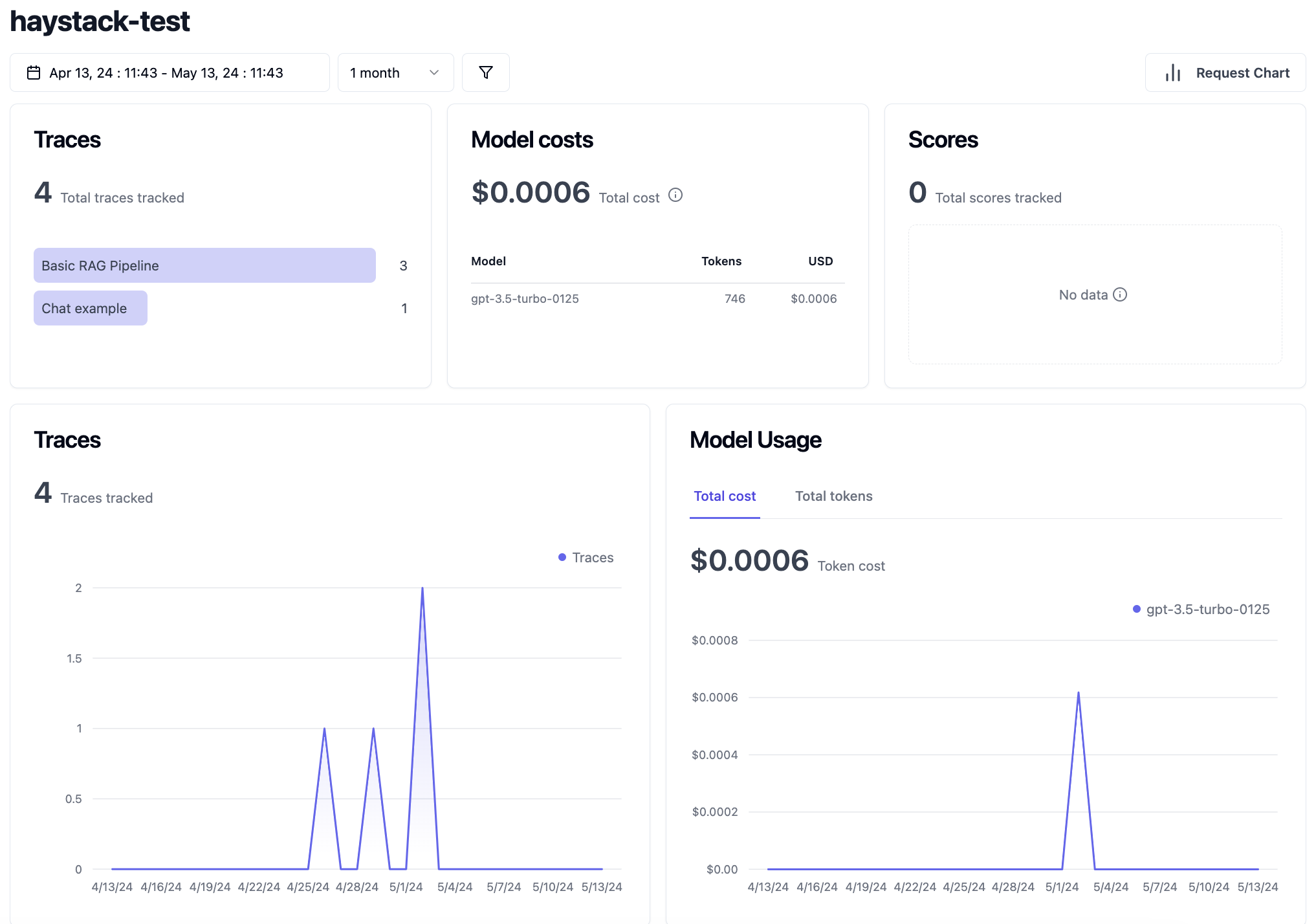

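Seeing the trace output in the terminal is nice, but the integration also sends the information to Langfuse. The Langfuse Dashboard offers a more comprehensive and polished user interface for understanding your pipeline. We'll take a look after one more example.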

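Use Langfuse in a RAG pipeline with chat

Agent and chat use cases are growing in popularity. If you want to use the integration to trace a pipeline that includes a chat generator component, here's an example of how to do it.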

from haystack import Pipeline
from haystack.components.builders import DynamicChatPromptBuilder
from haystack.components.generators.chat import OpenAIChatGenerator
from haystack.dataclasses import ChatMessage
from haystack_integrations.components.connectors.langfuse import LangfuseConnector

pipe = Pipeline()
pipe.add_component("tracer", LangfuseConnector("Chat example"))
pipe.add_component("prompt_builder", DynamicChatPromptBuilder())
pipe.add_component("llm", OpenAIChatGenerator(model="gpt-3.5-turbo"))

pipe.connect("prompt_builder.prompt", "llm.messages")

messages = [
    ChatMessage.from_system("Always respond in German even if some input data is in other languages."),
    ChatMessage.from_user("Tell me about {{location}}"),
]

response = pipe.run(
    data={"prompt_builder": {"template_variables": {"location": "Berlin"}, "prompt_source": messages}}
)
print(response["llm"]["replies"][0])
print(response["tracer"]["trace_url"])

# ChatMessage(content='Berlin ist die Hauptstadt von Deutschland und zugleich eines der bekanntesten kulturellen Zentren Europas. Die Stadt hat eine faszinierende Geschichte, die bis in die Zeiten des Zweiten Weltkriegs und des Kalten Krieges zurückreicht. Heute ist Berlin für seine vielfältige Kunst- und Musikszene, seine historischen Stätten wie das Brandenburger Tor und die Berliner Mauer sowie seine lebendige Street-Food-Kultur bekannt. Berlin ist auch für seine grünen Parks und Seen beliebt, die den Bewohnern und Besuchern Raum für Erholung bieten.', role=<ChatRole.ASSISTANT: 'assistant'>, name=None, meta={'model': 'gpt-3.5-turbo-0125', 'index': 0, 'finish_reason': 'stop', 'usage': {'completion_tokens': 137, 'prompt_tokens': 29, 'total_tokens': 166}})

# https://cloud.langfuse.com/trace/YOUR_UNIQUE_IDENTIFYING_STRING

Explore the Langfuse Dashboard

After running these code examples, head over to the Langfuse Dashboard to view and interact with the traces. As of this writing, the demo is free to try.

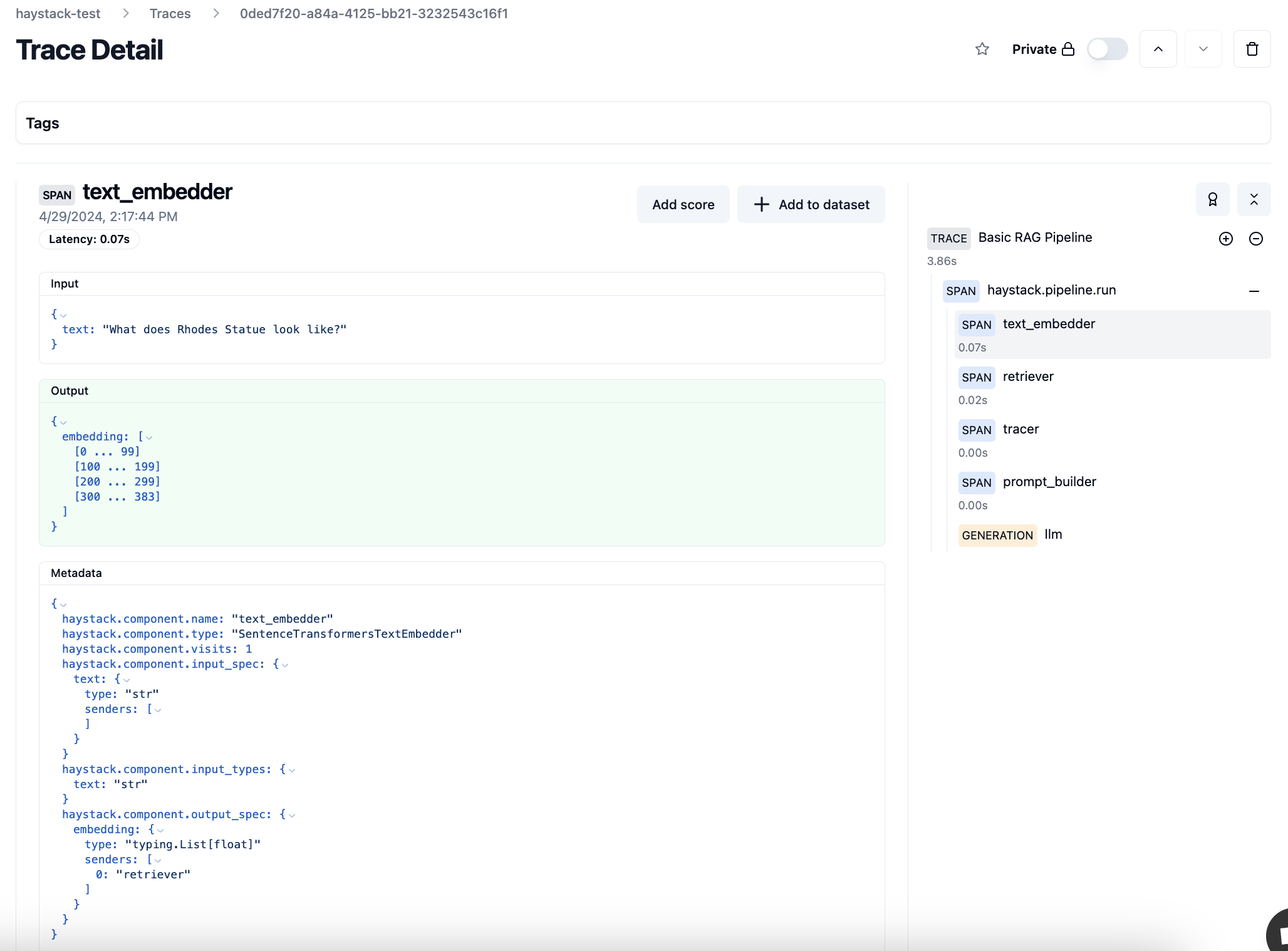

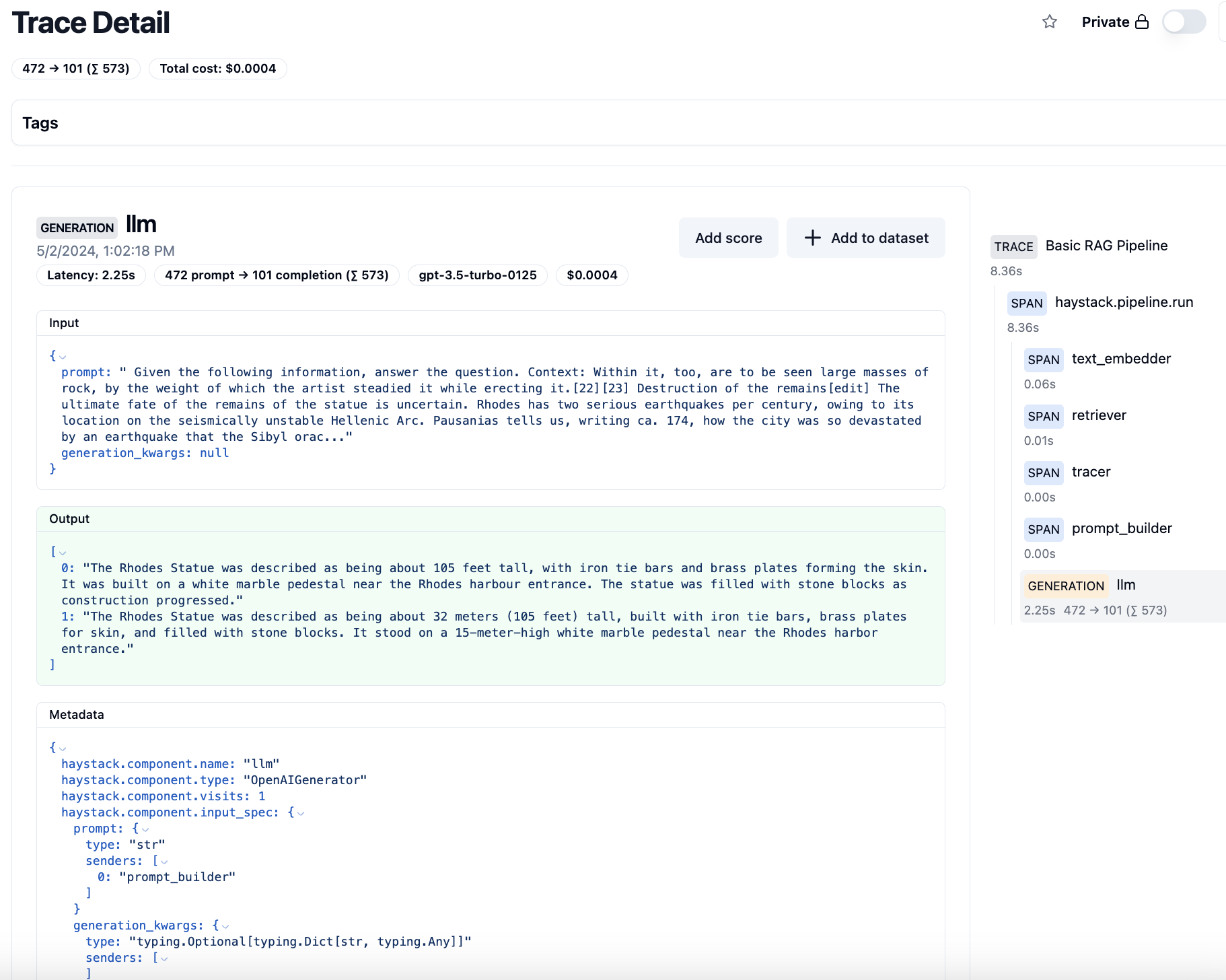

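Trace details

Trace details show the cost and latency of a specific end-to-end request. This data is useful for estimating usage and cost for a RAG application in production. For example, here are the trace details for the text embedder step of the pipeline we just ran. For a comprehensive explanation of LLM tracing, see the Langfuse docs.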

The right sidebar shows the latency of each step in the pipeline, which helps pinpoint performance bottlenecks.

Trace details labeled "generation" also show the monetary cost of the request.
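To sanity-check the cost shown in the dashboard, you can redo the arithmetic from the token usage in the pipeline response. This is a back-of-the-envelope sketch and not how Langfuse computes cost. The function estimate_cost_usd is hypothetical, and the per-1,000-token prices are made-up placeholders that you should replace with your provider's current rates.

```python
def estimate_cost_usd(usage: dict, input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimate request cost from token counts and per-1,000-token prices."""
    prompt_cost = usage["prompt_tokens"] / 1000 * input_price_per_1k
    completion_cost = usage["completion_tokens"] / 1000 * output_price_per_1k
    return prompt_cost + completion_cost


# Token usage taken from the RAG pipeline output shown earlier in this post.
usage = {"completion_tokens": 100, "prompt_tokens": 453, "total_tokens": 553}

# Hypothetical prices in USD per 1,000 tokens; not real provider rates.
cost = estimate_cost_usd(usage, input_price_per_1k=0.0005, output_price_per_1k=0.0015)

# Project the same request shape over a hypothetical 10,000 requests.
projected = cost * 10_000
print(f"per request: ${cost:.6f}, per 10,000 requests: ${projected:.2f}")
```

With these placeholder prices the estimate is 0.453 × 0.0005 + 0.1 × 0.0015 = 0.0003765 USD per request, which shows how quickly prompt size dominates cost once retrieved context is included.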

Traces can also be segmented by user or session, so you can get a more granular view of the user's journey.

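Evaluation

Evaluation helps us understand the quality of the results our LLM application returns to the end user. There are currently four ways to add scores to Langfuse:

- Manual evaluation

- User feedback

- Model-based evaluation

- Custom via SDK/API

To save time, this post only covers manual evaluation, but see the Langfuse docs for comprehensive information on all evaluation methods.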

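Click on a trace to manually add a score that records the quality of that specific request.

For this trace, the input shows our prompt, interpolated with the actual context passed to the LLM. Cool!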

Input:

Given the following information, answer the question.

Context:

Within it, too, are to be seen large masses of rock, by the weight of which the artist steadied it while erecting it.[22][23]

Destruction of the remains[edit]

The ultimate fate of the remains of the statue is uncertain. Rhodes has two serious earthquakes per century, owing to its location on the seismically unstable Hellenic Arc. Pausanias tells us, writing ca. 174, how the city was so devastated by an earthquake that the Sibyl oracle foretelling its destruction was considered fulfilled.[24] This means the statue could not have survived for long if it was ever repaired. By the 4th century Rhodes was Christianized, meaning any further maintenance or rebuilding, if there ever was any before, on an ancient pagan statue is unlikely. The metal would have likely been used for coins and maybe also tools by the time of the Arab wars, especially during earlier conflicts such as the Sassanian wars.[9]

The onset of Islamic naval incursions against the Byzantine empire gave rise to a dramatic account of what became of the Colossus.

Construction[edit]

Timeline and map of the Seven Wonders of the Ancient World, including the Colossus of Rhodes

Construction began in 292 BC. Ancient accounts, which differ to some degree, describe the structure as being built with iron tie bars to which brass plates were fixed to form the skin. The interior of the structure, which stood on a 15-metre-high (49-foot) white marble pedestal near the Rhodes harbour entrance, was then filled with stone blocks as construction progressed.[14] Other sources place the Colossus on a breakwater in the harbour. According to most contemporary descriptions, the statue itself was about 70 cubits, or 32 metres (105 feet) tall.[15] Much of the iron and bronze was reforged from the various weapons Demetrius's army left behind, and the abandoned second siege tower may have been used for scaffolding around the lower levels during construction.

Question: What does Rhodes Statue look like?

Answer:

Output:

[

0: "The Rhodes Statue was described as being about 105 feet tall, with iron tie bars and brass plates forming the skin. It was built on a white marble pedestal near the Rhodes harbour entrance. The statue was filled with stone blocks as construction progressed."

1: "The Rhodes Statue was described as being about 32 meters (105 feet) tall, built with iron tie bars, brass plates for skin, and filled with stone blocks. It stood on a 15-meter-high white marble pedestal near the Rhodes harbor entrance."

]

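Judging by the input and output, this looks like a decent-quality response. Click the "Add score" button and give it a score of 1. Scores can even be edited in case you make a mistake.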

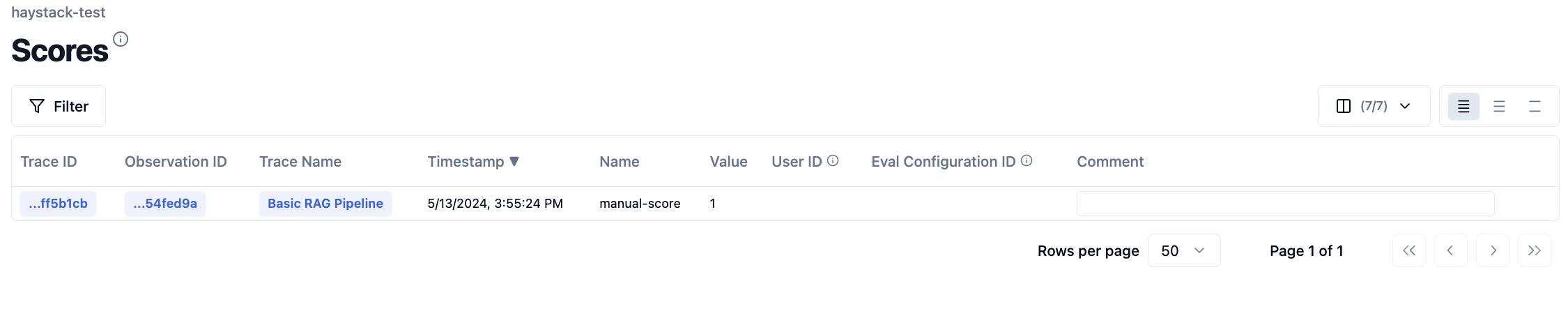

Now click the "Scores" section to see the score we added. Over time, this data helps build a complete picture of the quality of your LLM application.

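Wrapping it up

If you've been following along, today you've learned:

- How Langfuse gives you better visibility into your Haystack pipelines, so you can put them into production with confidence

- How to integrate Langfuse into Haystack RAG and chat pipelines

- The basics of LLM tracing and evaluation with the Langfuse Dashboard

For a small team, Langfuse ships new features at an impressive pace. We can't wait to see what they build next. To stay up to date on future updates, be sure to follow Langfuse and Haystack on Twitter. Thanks for reading!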